Fundamentals of Controllable Text Generation

Author: Murat Karakaya

Date created: 21 April 2021

Last modified: 24 May 2021

Description: This is an introductory tutorial on Controllable Text Generation in Deep Learning and the second part of the “Controllable Text Generation with Transformers” series. This series focuses on developing TensorFlow (TF) / Keras implementations of Controllable Text Generation from scratch. You can access all these parts from my blog at muratkarakaya.net.

Before getting started, I assume that you have already reviewed:

- the tutorial series “Text Generation methods in Deep Learning with Tensorflow (TF) & Keras”

- the tutorial series “Sequence-to-Sequence Learning”

- the previous parts in this series on my blog at muratkarakaya.net

Please ensure that you have completed the above tutorial series so that you can easily follow the discussion below.


PART A2: Fundamentals of Controllable Text Generation

What is the difference between Text Generation and Controllable Text Generation?

When generating samples from a Language Model (LM) by iteratively sampling the next token, we do not have much control over attributes of the output text, such as the topic, the style, the sentiment, etc.
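To make this concrete, here is a minimal Python sketch of iterative next-token sampling. It assumes a hypothetical toy bigram table (`BIGRAM_PROBS`) standing in for a real LM; the helper names `sample_next` and `generate` are illustrative only.

```python
import random

# Hypothetical toy "language model": for each previous token, a probability
# distribution over the next token (a bigram table, not a real LM).
BIGRAM_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.7, "</s>": 0.3},
    "dog": {"ran": 0.7, "</s>": 0.3},
    "sat": {"</s>": 1.0},
    "ran": {"</s>": 1.0},
}

def sample_next(prev_token, rng):
    """Sample one next token from p(next | prev)."""
    dist = BIGRAM_PROBS[prev_token]
    tokens = list(dist)
    weights = [dist[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

def generate(max_len=10, seed=None):
    """Iteratively sample tokens until the end token or max_len is reached."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    while len(tokens) < max_len and tokens[-1] != "</s>":
        tokens.append(sample_next(tokens[-1], rng))
    return tokens

print(" ".join(generate(seed=0)))
```

Each run yields whatever sentence the sampled tokens happen to form: nothing in the loop lets us ask for, say, a sentence about dogs or a sentence with a particular sentiment.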

However, many applications demand fine-grained control over the LM output.

For example, if we plan to use an LM to generate reading materials for kids, we would like to guide the output stories to be safe, educational, and easily understood by children.

The main difference between Text Generation and Controllable Text Generation is the ability to control the LM output attributes.

What is Controllable Text Generation?

Controllable text generation is the task of generating natural sentences whose attributes can be controlled. For example, we can define some attributes of the text to be generated such as:

- stylistic: politeness, sentiment, formality, etc.

- demographic: attributes of the person writing the text such as gender, age, etc.

- content: topics, information, keywords, entities, tense, etc.

- ordering: information, events, etc. in summaries or stories
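One simple way to expose such attributes to a model, used for example by CTRL (see the references), is to prepend control codes to each training text so that the model learns to condition on them. The sketch below only illustrates the data-preparation side; the `<name=value>` token format and the `add_control_tokens` helper are hypothetical.

```python
def add_control_tokens(tokens, attributes):
    """Prefix a token sequence with one control token per attribute.

    `attributes` is a dict such as {"tense": "past", "sentiment": "positive"}.
    Keys are sorted so the same attributes always yield the same prefix.
    """
    controls = [f"<{name}={value}>" for name, value in sorted(attributes.items())]
    return controls + list(tokens)

example = add_control_tokens(
    ["the", "movie", "was", "great"],
    {"tense": "past", "sentiment": "positive"},
)
print(example)
# ['<sentiment=positive>', '<tense=past>', 'the', 'movie', 'was', 'great']
```

At generation time, the same prefix would be fed to the trained model as the start of the sequence to request text with those attributes.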

For example, in this work, the authors train an LM such that it can control the tense (present or past) and attitude (positive or negative) of the generated text. Some experimental results are given below:

Below is another example, from this work, of generating poems.

Application Areas

Neural Machine Translation: Machine translation automatically translates text from one language to another.

Image Captioning: When an image is given, the model generates a text describing the image. You can access the below demo from this link.

Dialogue response generation (chatbots), where you can control:

- the persona

- various aspects of the response such as politeness, formality, authority, etc.

- the grounding of the responses in an external source of information

- the topic sequence

The below example is taken from Andrea Madotto, Zhaojiang Lin, Yejin Bang, Pascale Fung, The Adapter-Bot: All-In-One Controllable Conversational Model

Story generation, where you can control:

- the ending

- the persona

- the plot

- the topic sequence

The below example is taken from Nanyun Peng, Marjan Ghazvininejad, Jonathan May, Kevin Knight, Towards Controllable Story Generation

Modifying human-written text such as emails, reports, etc.:

- adjusting formality

- adjusting politeness

- pulling disparate source documents into a coherent unified one

The below example is taken from Zhiqiang Hu, Roy Ka-Wei Lee, Charu C. Aggarwal, Aston Zhang, Text Style Transfer: A Review and Experimental Evaluation

How can we design and train a Controllable Text Generator?

There are many proposals in the literature for designing a Controllable Text Generator. However, we first need to understand the guidelines and principles behind them.

Below, I will share the best practices to design a Controllable Text Generation model. But first, let’s learn an important Machine Learning approach.

What is Semi-Supervised Learning?

According to Wikipedia:

Semi-supervised learning is an approach to machine learning that combines a small amount of labeled data with a large amount of unlabeled data during training. Semi-supervised learning falls between unsupervised learning (with no labeled training data) and supervised learning (with only labeled training data).

We can use the Semi-supervised learning approach to train the Controllable Text Generator as discussed below.

A Generic Methodology: Semi-Supervised Learning

Labeled data are crucial for today’s machine learning. Thanks to the Internet, collecting data is easy, but labeling that data at scale is very hard, time-consuming, and expensive.

As a result, labeling collected data is only feasible for important problems where the payoff is worth the effort, such as machine translation, speech recognition, and self-driving.

Therefore, machine learning researchers aim to develop unsupervised learning algorithms that learn a good representation of a dataset, which can then be used to solve tasks using only a few labeled examples.

To design and train a Controllable Text Generator, we can follow a two-step approach:

- Step 1: We train a large unsupervised next-token-prediction language model on large amounts of unlabeled data. We expect this language model to learn a good representation of the unlabeled data.

- Step 2: We use this language model together with a smaller labeled dataset to re-purpose it for generating controllable text.

The above approach is an example of Semi-supervised learning.

Moreover, in Step 1, we can use a pre-trained language model instead of training from scratch.

In practice, we often have access to a pre-trained language model such as BERT or GPT. These models have already learned rich representations of token sequences through self-supervised training: GPT by predicting the next token, and BERT by predicting masked tokens.

The most crucial part of designing a controllable text generator is to decide how to use, modify, or re-purpose these pre-trained models.

Let’s now look at these steps in more detail.

How do we train an unsupervised language model with unlabeled text data?

In general, we have two options:

- Option A: Causal Language Models

- Option B: Masked Language Models

Option A: Causal Language Models

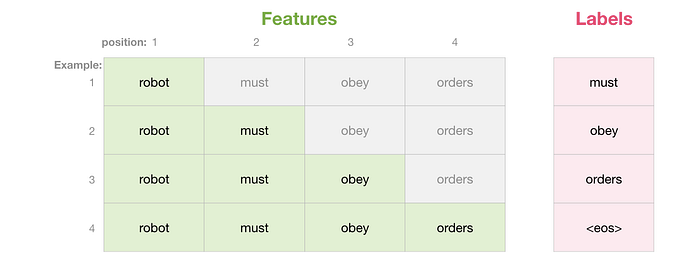

Causal language modeling is the task of predicting the next token given the preceding tokens of an input sequence. The model therefore attends only to the left context (the tokens to the left of the current position). Such training is particularly suited to generation, i.e., predicting the next (rightmost) token.

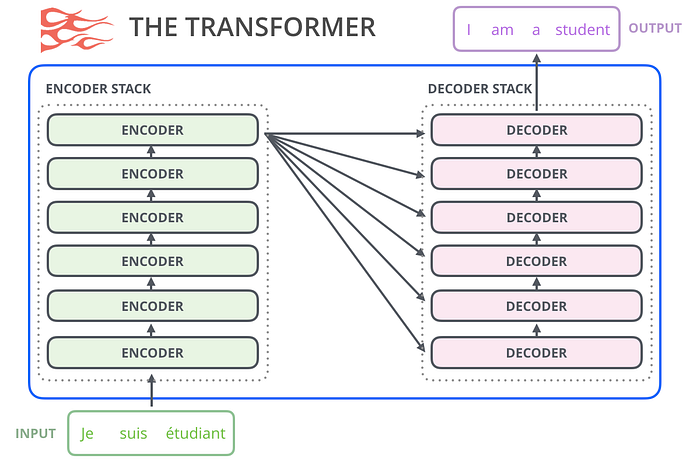

For these kinds of models, we mostly use the Decoder part of a transformer, since it has a masked self-attention layer that hides the tokens to the right of each position during training.
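The effect of this mask can be sketched in a few lines of NumPy (used here instead of TensorFlow only to keep the example small; it is not the Keras implementation from the upcoming parts). Scores for positions to the right of each token are set to negative infinity before the softmax, so their attention weights become exactly zero. The function name `causal_attention_weights` is illustrative.

```python
import numpy as np

def causal_attention_weights(scores):
    """Apply a causal (look-ahead) mask to raw attention scores, then softmax.

    scores: array of shape (seq_len, seq_len); row i holds query i's scores
    against every key j. Keys with j > i (tokens to the right) are masked out.
    """
    seq_len = scores.shape[0]
    # Lower-triangular boolean mask: True where key j <= query i.
    mask = np.tril(np.ones((seq_len, seq_len), dtype=bool))
    masked = np.where(mask, scores, -np.inf)
    # Numerically stable row-wise softmax; exp(-inf) == 0 for masked keys.
    masked = masked - masked.max(axis=-1, keepdims=True)
    weights = np.exp(masked)
    return weights / weights.sum(axis=-1, keepdims=True)

weights = causal_attention_weights(np.zeros((4, 4)))
print(np.round(weights, 2))
```

With all-zero scores, row $i$ spreads its attention evenly over tokens $0, \dots, i$ and gives zero weight to every later token.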

The image below illustrates masked self-attention over a token sequence.

The most famous application of this approach is the GPT family of language models.

The above image is taken from Jay Alammar’s blog, which I strongly recommend if you want to understand in detail how a transformer works.

IMPORTANT:

Observing the above image, one might ask:

You mentioned that we are using unlabeled data in training, but the image shows labels! Is there a mistake?

Nope! There is no mistake. However, we need to clarify what “labeling” means in the context of supervised learning.

In my definition,

labeling is attaching metadata to the data that does not exist in the raw data

For instance, in an image dataset, each image file contains only the RGB values of its pixels. We can attach a label to an image file according to its content, e.g., dog. The raw data (image file) does not contain this metadata; someone has to label the data using outside information!

On the other hand, in our example, the corpus contains the text as the raw data. We can tokenize the raw data and, at each position, use the next token in the sequence as the label to predict. This label already exists in the raw data. Thus, we do not consider this kind of process as “labeling”.
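A minimal sketch of how these (input, label) pairs come straight from the raw text, assuming a hypothetical word-level vocabulary: the label sequence is simply the input sequence shifted one position to the left. The helper name `make_causal_lm_pairs` is illustrative.

```python
def make_causal_lm_pairs(token_ids):
    """Build (input, target) sequences for next-token prediction.

    The target at position t is the token at position t + 1, so every
    "label" is taken from the raw text itself; no annotation is needed.
    """
    inputs = token_ids[:-1]
    targets = token_ids[1:]
    return inputs, targets

# Hypothetical word-level vocabulary, for illustration only.
vocab = {"the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4}
sentence = "the cat sat on the mat".split()
ids = [vocab[w] for w in sentence]
x, y = make_causal_lm_pairs(ids)
print(x)  # [0, 1, 2, 3, 0]
print(y)  # [1, 2, 3, 0, 4]
```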

Option B: Masked Language Models

We can also train a language model with unlabeled data to predict masked tokens at any position in the sequence, not only the next (rightmost) token! In this case, the model can use the context on both sides of each masked token.

The most famous application of this approach is the BERT family of language models.

For these kinds of models, we can use the Encoder part of a transformer since it can apply self-attention to the whole token sequence during training.
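A minimal sketch of how masked-LM training examples can be built from raw text, assuming whitespace tokenization and a `[MASK]` token. It masks each token independently with probability `mask_prob`; BERT selects about 15% of positions and, for some of them, keeps the original token or substitutes a random one instead of `[MASK]`, a detail omitted here. As with causal LMs, the labels are the original tokens, so no human annotation is required. The helper `mask_tokens` is illustrative.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Randomly replace tokens with [MASK] for masked language modeling.

    Returns (masked_tokens, labels): labels[i] is the original token at
    masked positions and None elsewhere (those positions are not scored).
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels

tokens = "the cat sat on the mat because it was tired".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=42)
print(masked)
print(labels)
```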

If you want to go deeper into the Transformer architecture, please visit this blog post by Jay Alammar, The Illustrated GPT-2 (Visualizing Transformer Language Models).

How to tune an unsupervised Language Model as a Controllable Language Model?

Below, I will briefly explain the methods to fine-tune an unsupervised Language Model as a Controllable Language Model. If you need a more comprehensive explanation, take a look at this video: Plug and Play Language Models: A Simple Approach to Controlled Text Generation and this blog post.

In the upcoming parts, we will implement these approaches in TensorFlow & Keras.

What are the strategies to employ a pre-trained Language Model as a Controllable Language Model?

There are many proposals in the literature for transforming pre-trained Language Models into Controllable Language Models. There is not yet a straightforward, widely agreed classification of these proposals.

One way to classify these strategies, suggested by this post, is as follows:

- Apply guided decoding strategies and select desired outputs at test time.

- Optimize for the most desired outcomes via good prompt design.

- Fine-tune the base model or steerable layers to do conditioned content generation.
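To illustrate the first strategy, here is a minimal sketch of one very simple guided-decoding heuristic: at test time, add a fixed bonus to the logits of words associated with the desired attribute before the softmax, leaving the model’s weights untouched. This is a simplified stand-in chosen for illustration, not the method of PPLM, GeDi, or any other paper in the references; the logits, the word list, and the `bonus` value are hypothetical.

```python
import math

def softmax(logits):
    """Convert a dict of token -> logit into a dict of token -> probability."""
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def guided_next_token_probs(logits, attribute_words, bonus=2.0):
    """Shift probability mass toward tokens tied to a desired attribute.

    The base model is not changed; we only add `bonus` to the logit of
    every token in `attribute_words` before applying the softmax.
    """
    adjusted = {
        tok: v + (bonus if tok in attribute_words else 0.0)
        for tok, v in logits.items()
    }
    return softmax(adjusted)

# Hypothetical next-token logits from a frozen LM after "The weather today is".
logits = {"sunny": 1.0, "terrible": 1.0, "cold": 0.5}
print(softmax(logits))
print(guided_next_token_probs(logits, attribute_words={"sunny"}))
```

With equal base logits for “sunny” and “terrible”, the bonus moves most of the probability mass to “sunny”. A larger bonus gives stronger control but may hurt fluency, which is one motivation for the more principled decoding methods listed in the references.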

Which parts of a Language Model can be modified to control the generated text?

In this work (video, paper), the authors provide a new schema of the pipeline of the generation process by classifying it into five modules. Attributes can be controlled during generation by modifying one or more of these five modules (see below).

According to this work, we have five modules that can be used for controlling the generation process:

- External Input module is responsible for the initialization $h_0$ of the generation process.

- Sequential Input module is the input $x_t$ at each time step of the generation.

- Generator Operations module performs consistent operations or calculations on all the input at each time step.

- Output module is the output $o_t$ of the generator, which is further projected onto the vocabulary space to predict the token at each time step.

- Training Objective module takes care of the loss functions used for training the generator.
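To see where each module sits, here is a minimal NumPy sketch of one forward pass of a toy recurrent language model, assuming randomly initialized weights and a single attribute id as the control signal. The parameter names (`E`, `A`, `W_x`, `W_h`, `W_o`, `W_init`), the sizes, and the function `run` are hypothetical; the point is only that an attribute can enter through the External Input ($h_0$) or the Sequential Input ($x_t$), while the Output and Training Objective modules remain those of an ordinary LM.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, EMB, HID, N_ATTR = 6, 4, 8, 2  # toy sizes (hypothetical)

# Toy, randomly initialized parameters (no training is done here).
E = rng.normal(size=(VOCAB, EMB))             # token embeddings
A = rng.normal(size=(N_ATTR, EMB))            # attribute (control) embeddings
W_init = rng.normal(size=(HID, EMB)) * 0.1    # attribute -> initial state
W_x = rng.normal(size=(HID, 2 * EMB)) * 0.1   # input -> hidden (token + attribute)
W_h = rng.normal(size=(HID, HID)) * 0.1       # hidden -> hidden
W_o = rng.normal(size=(VOCAB, HID)) * 0.1     # projection onto the vocabulary

def run(token_ids, attr_id):
    """Return the mean next-token cross-entropy of a sequence under an attribute."""
    a = A[attr_id]
    # External Input module: initialize h_0 from the attribute.
    h = np.tanh(W_init @ a)
    loss = 0.0
    for t in range(len(token_ids) - 1):
        # Sequential Input module: x_t = [token embedding; attribute embedding].
        x_t = np.concatenate([E[token_ids[t]], a])
        # Generator Operations module: the same recurrent update at every step.
        h = np.tanh(W_x @ x_t + W_h @ h)
        # Output module: o_t, projected onto the vocabulary space.
        o_t = h
        logits = W_o @ o_t
        probs = np.exp(logits - logits.max())
        probs /= probs.sum()
        # Training Objective module: cross-entropy on the actual next token.
        loss -= np.log(probs[token_ids[t + 1]])
    return float(loss / (len(token_ids) - 1))

print(run([0, 3, 2, 5], attr_id=0), run([0, 3, 2, 5], attr_id=1))
```

Changing `attr_id` changes both $h_0$ and every $x_t$, and therefore the predicted distributions and the loss; training would then adjust the weights so that the attribute actually steers the generated text.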

Don’t Worry!

We will delve into these strategies when we implement them using Python, TensorFlow, and Keras in the upcoming parts.

You can check the above references to the sample studies for each strategy. In addition, you can visit Lilian Weng’s blog post and watch this video for a summary of the related work.

Moreover, please note that controlling (conditioning) a language model is still an open research question. Each strategy above has certain pros & cons.

What kinds of Deep Learning Models can be used as a Controllable Text Generator?

You can implement the above strategies by modifying some existing Neural Network paradigms.

One famous approach in Neural NLP is to apply sequence-to-sequence learning (Seq2Seq). The implementation of Seq2Seq learning mostly depends on the encoder-decoder paradigm. To apply the encoder-decoder paradigm, we can use different methods such as:

- Recurrent Neural Networks (RNN: e.g., LSTM / GRU)

- Transformers (e.g., GPT / BERT)

There are also other paradigms in Artificial Neural Networks, such as:

- Generative Adversarial Networks (GAN),

- Reinforcement Learning (RL),

- Variational Autoencoder (VAE),

- etc.

We can adapt these architectures to build a Controllable Text Generation model, using the two classifications above to decide where to modify an existing model.

Don’t Worry!

We will discuss the above concepts and models when we implement sample Controllable Text Generation models using Python, TensorFlow, and Keras in the upcoming parts.

Controllable Text Generation Summary

- So far, we have reviewed the important concepts, approaches, and methods related to the Controllable Text Generation in Deep Learning.

- We will design and build a TensorFlow Data Pipeline for training a Controllable Text Generator in the next part.

- After that, we will implement a Controllable Text Generator with a Recurrent Neural Network (LSTM) model in Python, TensorFlow & Keras. We will adapt the LSTM language model’s Sequential Input module to control the generated text.

Thus, if you want to learn how to code a Controllable Text Generator with various Deep Learning models, please follow all the parts of the “Controllable Text Generation Tutorial series”.

Comments or Questions?

Please share your Comments or Questions.

Thank you in advance.

Do not forget to check out the next parts!

Take care!

References

Language Models:

- Yoshua Bengio, Réjean Ducharme, Pascal Vincent, Christian Janvin, A neural probabilistic language model

- Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova, BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

- Alec Radford, Karthik Narasimhan, Improving Language Understanding by Generative Pre-Training (GPT)

- Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, Ilya Sutskever, Language Models are Unsupervised Multitask Learners (GPT-2)

- Tom B. Brown et al., Language Models are Few-Shot Learners (GPT-3)

- Jay Alammar, The Illustrated GPT-2 (Visualizing Transformer Language Models)

- Murat Karakaya, Encoder-Decoder Structure in Seq2Seq Learning Tutorials: on YouTube in English or Turkish. You can also access these tutorials on muratkarakaya.net.

- Sebastian Ruder, Recent Advances in Language Model Fine-tuning

- Jackson Stokes, A guide to language model sampling in AllenNLP

- Jason Brownlee, How to Implement a Beam Search Decoder for Natural Language Processing

Text Generation:

- Murat Karakaya, Text Generation with different Deep Learning Models Tutorials: on YouTube in English or Turkish. You can also access these tutorials on muratkarakaya.net.

- Apoorv Nandan, Text generation with a miniature GPT

- Nicholas Renotte, Generate Blog Posts with GPT2 & Hugging Face Transformers | AI Text Generation GPT2-Large

- Mariya Yao, Novel Methods For Text Generation Using Adversarial Learning & Autoencoders

- Jiaxian Guo, Sidi Lu, Han Cai, Weinan Zhang, Yong Yu, Jun Wang, Long Text Generation via Adversarial Training with Leaked Information

- Patrick von Platen, How to generate text: using different decoding methods for language generation with Transformers

- Discussion Forum, What is the difference between word-based and char-based text generation RNNs?

- Papers with Code web page, Text Generation

- Ben Mann, How to sample from language models

Controllable Text Generation:

- Neil Yager, Neural text generation: How to generate text using conditional language models

- Alec Radford, Ilya Sutskever, Rafal Józefowicz, Jack Clark, Greg Brockman, Unsupervised Sentiment Neuron

- Sumanth Dathathri, Andrea Madotto, Janice Lan, Jane Hung, Eric Frank, Piero Molino, Jason Yosinski, Rosanne Liu, Plug and Play Language Models: A Simple Approach to Controlled Text Generation, video, code

- Ivan Lai, Conditional Text Generation by Fine-Tuning GPT-2

- Lilian Weng, Controllable Neural Text Generation

- Shrimai Prabhumoye, Alan W Black, Ruslan Salakhutdinov, Exploring Controllable Text Generation Techniques, video, paper

- Muhammad Khalifa, Hady Elsahar, Marc Dymetman, A Distributional Approach to Controlled Text Generation, video, paper

- Abigail See, Controlling text generation for a better chatbot

- Alvin Chan, Yew-Soon Ong, Bill Pung, Aston Zhang, Jie Fu, CoCon: A Self-Supervised Approach for Controlled Text Generation, video, paper

- Nanyun Peng, Marjan Ghazvininejad, Jonathan May, Kevin Knight, Towards Controllable Story Generation

- Andrea Madotto, Zhaojiang Lin, Yejin Bang, Pascale Fung, The Adapter-Bot: All-In-One Controllable Conversational Model

- Zhiqiang Hu, Roy Ka-Wei Lee, Charu C. Aggarwal, Aston Zhang, Text Style Transfer: A Review and Experimental Evaluation

Controllable Text Generation with Guided Decoding (Sampling) Strategy:

- Marjan Ghazvininejad, Xing Shi, Jay Priyadarshi, Kevin Knight, Hafez: an Interactive Poetry Generation System

- Ari Holtzman, Jan Buys, Maxwell Forbes, Antoine Bosselut, David Golub, Yejin Choi, Learning to Write with Cooperative Discriminators

- Jiwei Li, Will Monroe, Dan Jurafsky, Learning to Decode for Future Success

- Ashutosh Baheti, Alan Ritter, Jiwei Li, Bill Dolan, Generating More Interesting Responses in Neural Conversation Models with Distributional Constraints

- Clara Meister, Tim Vieira, Ryan Cotterell, If beam search is the answer, what was the question?

- Jiatao Gu, Kyunghyun Cho, Victor O.K. Li, Trainable Greedy Decoding for Neural Machine Translation

- Aditya Grover, Jiaming Song, Alekh Agarwal, Kenneth Tran, Ashish Kapoor, Eric Horvitz, Stefano Ermon, Bias Correction of Learned Generative Models using Likelihood-Free Importance Weighting

- Yuntian Deng, Anton Bakhtin, Myle Ott, Arthur Szlam, Marc’Aurelio Ranzato, Residual Energy-Based Models for Text Generation

Controllable Text Generation with Smart Prompt Design Strategy:

- Taylor Shin, Yasaman Razeghi, Robert L. Logan IV, Eric Wallace, Sameer Singh, AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts

- Xiang Lisa Li, Percy Liang, Prefix-Tuning: Optimizing Continuous Prompts for Generation

- Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin, Semantically Equivalent Adversarial Rules for Debugging NLP models

- Zhengbao Jiang, Frank F. Xu, Jun Araki, Graham Neubig, How Can We Know What Language Models Know?

Controllable Text Generation with Fine-tuning Strategy:

- Angela Fan, Mike Lewis, Yann Dauphin, Hierarchical Neural Story Generation

- Nanyun Peng, Marjan Ghazvininejad, Jonathan May, Kevin Knight, Towards Controllable Story Generation

- Nitish Shirish Keskar, Bryan McCann, Lav R. Varshney, Caiming Xiong, Richard Socher, CTRL: A Conditional Transformer Language Model for Controllable Generation

- Marc’Aurelio Ranzato, Sumit Chopra, Michael Auli, Wojciech Zaremba, Sequence Level Training with Recurrent Neural Networks

- Khanh Nguyen, Hal Daumé III, Jordan Boyd-Graber, Reinforcement Learning for Bandit Neural Machine Translation with Simulated Human Feedback

- Sanghyun Yi, Rahul Goel, Chandra Khatri, Alessandra Cervone, Tagyoung Chung, Behnam Hedayatnia, Anu Venkatesh, Raefer Gabriel, Dilek Hakkani-Tur, Towards Coherent and Engaging Spoken Dialog Response Generation Using Automatic Conversation Evaluators

- Nisan Stiennon, Long Ouyang, Jeff Wu, Daniel M. Ziegler, Ryan Lowe, Chelsea Voss, Alec Radford, Dario Amodei, Paul Christiano, Learning to summarize from human feedback

- Anh Nguyen, Jeff Clune, Yoshua Bengio, Alexey Dosovitskiy, Jason Yosinski, Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space

- Sumanth Dathathri, Andrea Madotto, Janice Lan, Jane Hung, Eric Frank, Piero Molino, Jason Yosinski, Rosanne Liu, Plug and Play Language Models: A Simple Approach to Controlled Text Generation

- Lianhui Qin, Vered Shwartz, Peter West, Chandra Bhagavatula, Jena Hwang, Ronan Le Bras, Antoine Bosselut, Yejin Choi, Back to the Future: Unsupervised Backprop-based Decoding for Counterfactual and Abductive Commonsense Reasoning

- Jeffrey O Zhang, Alexander Sax, Amir Zamir, Leonidas Guibas, Jitendra Malik, Side-Tuning: A Baseline for Network Adaptation via Additive Side Networks

- Yoel Zeldes, Dan Padnos, Or Sharir, Barak Peleg, Technical Report: Auxiliary Tuning and its Application to Conditional Text Generation

- Ben Krause, Akhilesh Deepak Gotmare, Bryan McCann, Nitish Shirish Keskar, Shafiq Joty, Richard Socher, Nazneen Fatema Rajani, GeDi: Generative Discriminator Guided Sequence Generation